Goals

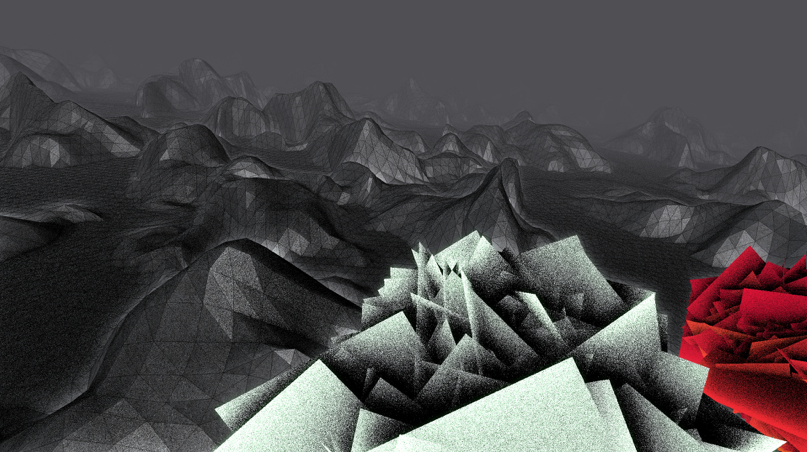

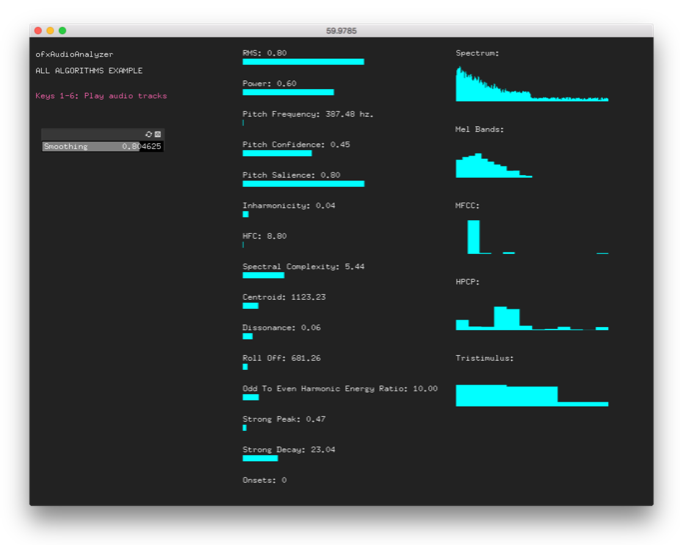

In this project, we set out to create a music video in which the interaction between visuals is driven by audio analysis, hence the term algorithmic driven visualisation. We did this by exploring different analysis methods and algorithms that can be combined into a coherent visualisation. The exploration was carried out in the creative coding frameworks Processing and openFrameworks, and the final prototype was built in Unity3D. Some of the computational analyses were first done in Python and IPython Notebook before being implemented for real-time analysis in openFrameworks.

Technology and Implementation

The final prototype consisted of two applications that communicated in real time. openFrameworks performed the sound analysis in real time, while Unity3D took care of the visualisations. The sound controller was an extended version of a popular library that is available as an open-source project. Building the visuals in Unity also made it possible to explore different cinematic post-processing methods as well as advanced graphics techniques such as shader work.
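To make the two-application setup more concrete, here is a minimal sketch of the analysis side: it computes one feature per audio frame and sends it to the visual application. This is an illustration under assumptions, not the prototype's actual code. The write-up does not say which transport or message format was used, so plain UDP with a JSON payload is assumed here, and the address `127.0.0.1:9000`, the feature choice (RMS level), and the names `rms`, `encode_features` and `send_features` are all hypothetical. Python is used because the analyses were first explored in it; the real-time version ran in openFrameworks.

```python
import json
import math
import socket

# Hypothetical address of the visual application (assumption: the
# original transport, host and port are not documented in the write-up).
VISUALS_ADDR = ("127.0.0.1", 9000)


def rms(frame):
    """Root-mean-square level of one audio frame (samples in [-1, 1])."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def encode_features(frame, frame_index):
    """Pack the features of one frame into a small JSON datagram."""
    message = {"frame": frame_index, "rms": rms(frame)}
    return json.dumps(message).encode("utf-8")


def send_features(sock, frame, frame_index, addr=VISUALS_ADDR):
    """Send one frame's features to the visual application over UDP."""
    sock.sendto(encode_features(frame, frame_index), addr)


if __name__ == "__main__":
    # A synthetic 440 Hz sine frame stands in for live audio input.
    sample_rate, frame_size = 44100, 1024
    frame = [
        math.sin(2 * math.pi * 440 * n / sample_rate)
        for n in range(frame_size)
    ]
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        send_features(sock, frame, frame_index=0)
```

On the receiving side, the visual application would read each datagram and map values such as `rms` onto visual parameters. UDP is a reasonable fit for this kind of sketch because a dropped frame matters less than latency, but any low-latency channel between the two processes would serve the same purpose.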